PDF version of this entire document

In this chapter, the Kotcheff and Taylor-inspired objective function (already introduced in Chapter 4) is used because it is closely related to MDL whilst being simple to implement, but this time it is applied to images. The objective function chosen here relies on an implicit similarity measure, which is an approximation of the quality of a model. This is a combined model of shape and intensity, and the way the two are combined relies on a weighting factor which will be explored later. The derivation of combined models was explained in Chapter 3. In this 1-D case, the combined model is built by taking all the images and all the shapes and fusing the properties of each, such that both components are simultaneously kept in check.
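As a rough illustration of how a weighting factor can balance the two components, the sketch below concatenates weighted shape vectors with intensity vectors, one row per image. It is a simplification made for this example, not the derivation from Chapter 3; the function name `combine_shape_and_intensity`, the parameter `weight` and the toy numbers are all hypothetical.

```python
import numpy as np


def combine_shape_and_intensity(shapes, intensities, weight):
    """Stack weighted shape vectors next to intensity vectors, one row per image.

    Assumption for this sketch: plain concatenation of mean-centred vectors.
    """
    shapes = np.asarray(shapes, dtype=float)
    intensities = np.asarray(intensities, dtype=float)
    if shapes.shape[0] != intensities.shape[0]:
        raise ValueError("need one shape vector and one intensity vector per image")
    # Centre each component on its own mean so that only variation is modelled.
    shape_dev = shapes - shapes.mean(axis=0)
    intensity_dev = intensities - intensities.mean(axis=0)
    # The weight sets how much shape variation counts relative to intensity.
    return np.hstack([weight * shape_dev, intensity_dev])


# Three toy 1-D examples: 3 shape coordinates and 4 intensity samples each.
shapes = [[0.0, 1.0, 2.0], [0.1, 1.1, 2.1], [-0.1, 0.9, 1.9]]
intensities = [[10.0, 50.0, 10.0, 5.0],
               [12.0, 48.0, 11.0, 5.0],
               [9.0, 52.0, 10.0, 6.0]]
combined = combine_shape_and_intensity(shapes, intensities, weight=2.0)
print(combined.shape)
```

A larger `weight` makes shape differences dominate the covariance of the combined vectors, while a smaller one lets intensity differences dominate.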

To repeat a key argument, any set of unregistered images can be used to build such a model, but only a properly registered set of images - that which results in high groupwise similarity - builds a good model. This observation can be exploited to create a similarity measure that not only deals with pairs of images, but can also deal with large sets.

Similarity is computed indirectly in this case. The algorithm does so by calculating the complexity of a combined model of shape and intensity, namely by looking at the covariance matrix of that model.

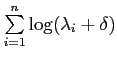

To efficiently evaluate model complexity, $\sum_{i=1}^{n} \log(\lambda_i + \delta)$ is obtained, where $\lambda_i$ are the $n$ eigenvalues of the covariance matrix whose magnitudes are the greatest and $\delta$ is a small constant (0.01) which adds weight to each eigenvalue (following Kotcheff and Taylor). This approximates the logarithm of the determinant of the covariance matrix.
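A minimal sketch of such a complexity measure is given below, assuming the model data is available as a matrix with one vector per image. It sums the logarithms of the largest covariance eigenvalues, each offset by a small constant. The function name `model_complexity`, the parameter names and the toy Gaussian bumps are illustrative choices rather than anything taken from the original implementation.

```python
import numpy as np


def model_complexity(data, n_modes, delta=0.01):
    """Sum of log(eigenvalue + delta) over the n_modes largest eigenvalues.

    `data` holds one model vector per row (one row per image).
    """
    data = np.asarray(data, dtype=float)
    # Covariance across images; rowvar=False treats columns as variables.
    covariance = np.cov(data, rowvar=False)
    # eigvalsh suits symmetric matrices and returns eigenvalues in ascending order.
    eigenvalues = np.linalg.eigvalsh(covariance)
    # Rounding can leave tiny negative values; clip them before taking logs.
    largest = np.clip(eigenvalues[::-1][:n_modes], 0.0, None)
    return float(np.sum(np.log(largest + delta)))


def bump(x, centre, height):
    """Toy 1-D 'image': a Gaussian bump of width 3 samples."""
    return height * np.exp(-((x - centre) ** 2) / (2 * 3.0 ** 2))


x = np.arange(50)
# Same position, slightly different heights: stands in for a registered set.
aligned = np.array([bump(x, 25, h) for h in (0.8, 0.9, 1.0, 1.1, 1.2)])
# Same height, different positions: stands in for an unregistered set.
misaligned = np.array([bump(x, c, 1.0) for c in (21, 23, 25, 27, 29)])

print(model_complexity(aligned, n_modes=4))
print(model_complexity(misaligned, n_modes=4))
```

In this toy case the aligned set varies along a single mode, so its score is lower than that of the misaligned set, whose variation spreads over several modes.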

This algorithm is related to the approach of Kotcheff et al. and it makes the registration purely model-driven so that no reference image is required. The objective function leads to one distinct solution without dependence upon individual images. This resolves the recurring issue of having to select a reference image and treat the problem as if it relies primarily on that one image.

This approach takes into account pixel values and shapes for whole images, so it may suffer from limitations such as a lack of consideration for localised differences. It may also treat incorrectly registered images as though their model is "good", because it considers the model data alone, and an alignment that yields a good model need not be the correct one. In practice, however, evidence suggests that these issues are not encountered, at least not with the data handled here.

Given an approximation of model quality and a method for manipulating warps, a general-purpose optimiser (Nelder-Mead in this case) is used to find sets of warp parameters which maximise the quality of the model, that is, minimise its complexity. In the next section, two methods are described which can assist the optimisation, either by having it focus on interesting parts of the problem or by reducing the complexity of the problem, in which case the optimisation can be made quicker. Specific details about the optimisers are also given in the next section.
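The optimisation loop can be sketched as follows, under strong simplifying assumptions: the data is intensity-only, each warp is a single translation per image, and the complexity measure is a sum of log eigenvalues as in the Kotcheff and Taylor-style objective. SciPy's `minimize` with `method="Nelder-Mead"` stands in for the optimiser; the helper names `warp`, `objective` and `model_complexity` are hypothetical.

```python
import numpy as np
from scipy.optimize import minimize

GRID = np.arange(50, dtype=float)


def model_complexity(data, n_modes, delta=0.01):
    """Sum of log(eigenvalue + delta) over the n_modes largest eigenvalues."""
    eigenvalues = np.linalg.eigvalsh(np.cov(np.asarray(data, dtype=float), rowvar=False))
    largest = np.clip(eigenvalues[::-1][:n_modes], 0.0, None)
    return float(np.sum(np.log(largest + delta)))


def warp(signal, shift):
    """Translate a 1-D signal by `shift` samples using linear interpolation."""
    return np.interp(GRID - shift, GRID, signal)


def objective(shifts, signals, n_modes):
    """Complexity of the model built from the warped set (lower is better)."""
    warped = np.array([warp(s, d) for s, d in zip(signals, shifts)])
    return model_complexity(warped, n_modes)


# Five toy signals: the same Gaussian bump at different positions.
signals = np.array([np.exp(-((GRID - c) ** 2) / 18.0) for c in (21, 23, 25, 27, 29)])

x0 = np.zeros(len(signals))  # start from "no warp" for every image
# An explicit starting simplex with steps of one sample along each parameter.
initial_simplex = np.vstack([x0, x0 + np.eye(len(x0))])

result = minimize(
    objective,
    x0,
    args=(signals, 4),
    method="Nelder-Mead",
    options={"initial_simplex": initial_simplex, "maxiter": 2000,
             "xatol": 1e-3, "fatol": 1e-6},
)

start_value = objective(x0, signals, 4)
print("complexity before:", start_value)
print("complexity after: ", result.fun)
print("shifts found:     ", result.x)
```

No image is singled out as a reference here: all shifts are free parameters and only the model built from the whole set is scored. Nothing in this toy prevents degenerate solutions, such as a bump being pushed out of the sampled range, so the shifts it finds should be inspected rather than trusted.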

Roy Schestowitz 2010-04-05