
The GIMP is an extremely powerful program, and animation in the GIMP can be achieved with layers. That’s why I can get so much work done with just Free software and GNU tools like gnuplot. This includes the following new examples, which were made by taking screenshots (from the GIMP), stacking them up as layers, and then saving them as a GIF file with the animation option enabled.

The following file, an animated GIF image comprising 8 frames set 600 ms apart (click the image to zoom), shows my new algorithm tracking the heart’s boundaries in a sequence taken from the same slice. Staying within one slice prevents sudden, unregistered changes in the intensity values near the point being probed. Initially, 16 points are fixed in a region. They are equally spaced, although that is not a strict requirement because the program takes any list of points and handles each one in turn. The points are separated by a 5-pixel distance along the x and y axes, although that too can vary independently based on parameter input that sets a grid (better initialisation would look for edges of interest). The arrows shown are narrow and crude, although an option exists for making them thick (which then hides interesting parts of the image). The shuffle parameters in this case are a frame size of 9×9 pixels, a shuffle radius of 5 pixels, and a summation of pixel-wise differences.
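The tracking step described above can be sketched roughly as follows. This is a minimal illustration, not the code used for the animation: it assumes that the "shuffle radius" acts as a search radius around each point and that the similarity score is a plain sum of absolute pixel differences over the 9×9 window. The function names `make_grid`, `window_sad` and `track_point` are hypothetical, and frames are assumed to be lists of rows of grey-level values.

```python
def make_grid(x0, y0, n_side=4, spacing=5):
    """Return n_side * n_side (x, y) points, `spacing` pixels apart on both axes."""
    return [(x0 + i * spacing, y0 + j * spacing)
            for j in range(n_side) for i in range(n_side)]


def window_sad(prev, curr, x, y, dx, dy, half=4):
    """Sum of absolute differences between the window centred on (x, y) in
    `prev` and the window centred on (x + dx, y + dy) in `curr`."""
    total = 0
    for v in range(-half, half + 1):
        for u in range(-half, half + 1):
            total += abs(prev[y + v][x + u] - curr[y + dy + v][x + dx + u])
    return total


def track_point(prev, curr, x, y, half=4, radius=5):
    """Return the displacement (dx, dy), within `radius` pixels on each axis,
    that minimises the window difference between two consecutive frames."""
    height, width = len(prev), len(prev[0])

    def inside(px, py):
        # True when a full window centred on (px, py) fits in the image.
        return half <= px < width - half and half <= py < height - half

    if not inside(x, y):
        return (0, 0)  # assumption: points too close to the border stay put
    best, best_cost = (0, 0), None
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            if not inside(x + dx, y + dy):
                continue
            cost = window_sad(prev, curr, x, y, dx, dy, half)
            if best_cost is None or cost < best_cost:
                best, best_cost = (dx, dy), cost
    return best
```

With the defaults, `make_grid(x0, y0)` gives the 16 equally spaced starting points, and calling `track_point` on each of them for every pair of consecutive frames yields the displacements that the arrows would represent. Because the function takes one point at a time, any list of points works, not only a regular grid.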

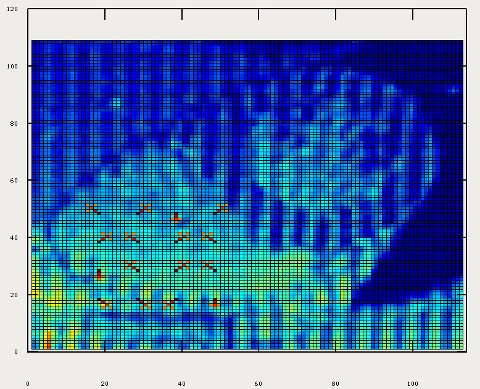

A colour representation of the same image sequence is shown below (click to zoom).

Another newly implemented method looks at a different measure for a window of pixels taken from consecutive frames. It considers the minimum of the pixel-wise differences in a given range rather than taking the average difference. The results obtained using each of these methods cannot be easily compared without a simplified synthetic set, and a proper study would need to involve systematic experiments that look at how varying the window size, radius, and calculation method affects overall performance. There is quite a project right there, but it only involves tracking, with or without tagging.

Tags can help in identifying good landmark points to start with: points that define an anatomically meaningful edge to start from and track as the sequence of frames progresses. Eventually, providing a formula for normalising a measure of similarity would be nice. Such a normalisation method, if properly applied, gives a new way of finding landmark points ‘on the move’. Doing so using non-rigid registration (NRR) is not possible for the heart, as there is hardly a one-to-one correspondence between points (too much movement).

The hope is that by employing a fast tracking algorithm with a good transform which adjusts itself for image intensity, size, et cetera, it will be possible to identify edges and perform localised measurements, and perhaps even do statistical analysis based on different sets of videos (a long-term goal). These ideas are not far-fetched, and the body of work already done in this area ought to be explored. The novelty is the transform being used to quickly calculate similarity with respect to neighbouring points, so existing work can show how to adjust parameters to get better results. In this particular problem domain, the objective is to track the heart’s contours reliably and robustly enough, but it is not simple to do this ‘on the fly’ (at the speed of video playback, for example).
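To make the difference between the two measures concrete, here is a small sketch under one possible reading of "a minimum of pixel-wise differences in a given range": for every pixel in the window of the first frame, take the smallest absolute difference to any pixel of the next frame within a given radius, then sum those minima. The exact formulation in my code may differ, and the names `mean_abs_diff` and `min_range_diff` are hypothetical. Both functions assume the window lies fully inside the image.

```python
def mean_abs_diff(prev, curr, x, y, half=4):
    """Average absolute difference over the window centred on (x, y)."""
    side = 2 * half + 1
    total = sum(abs(prev[y + v][x + u] - curr[y + v][x + u])
                for v in range(-half, half + 1)
                for u in range(-half, half + 1))
    return total / (side * side)


def min_range_diff(prev, curr, x, y, half=4, radius=1):
    """For each window pixel in `prev`, take the smallest absolute difference
    to any pixel of `curr` within `radius`, then sum those minima."""
    height, width = len(curr), len(curr[0])
    total = 0
    for v in range(-half, half + 1):
        for u in range(-half, half + 1):
            px, py = x + u, y + v
            best = None
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    qx, qy = px + dx, py + dy
                    # Ignore neighbours that fall outside the image.
                    if 0 <= qx < width and 0 <= qy < height:
                        d = abs(prev[py][px] - curr[qy][qx])
                        if best is None or d < best:
                            best = d
            total += best
    return total
```

The minimum-based score tolerates small local shifts that the average penalises, which is one reason the two are hard to compare without a synthetic set where the true motion is known. With `radius=0` the second function reduces to a plain sum of absolute differences.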

Today I implemented a circular arrangement of landmark points, so that the algorithm identifies something approximately similar to the shape of the heart and then places a given number of points around it. In addition, a boundary is shown by sampling between those points, which gives a contour, with or without arrows on top of it. I will upload the code shortly (it needs tidying up).
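Until the tidied-up code is available, the idea can be sketched as below. This is only an illustration: it assumes the centre and radius of the circle are already known (the step that estimates them from the image is not shown), and it samples the boundary by straight-line interpolation between neighbouring landmarks. The names `circular_landmarks` and `sample_contour` are hypothetical.

```python
import math


def circular_landmarks(cx, cy, radius, n_points=16):
    """Place n_points landmarks evenly around a circle centred on (cx, cy)."""
    step = 2 * math.pi / n_points
    return [(cx + radius * math.cos(k * step),
             cy + radius * math.sin(k * step))
            for k in range(n_points)]


def sample_contour(points, samples_per_segment=10):
    """Return a closed contour by sampling linearly between consecutive
    landmarks, wrapping from the last landmark back to the first."""
    contour = []
    n = len(points)
    for k in range(n):
        (x0, y0), (x1, y1) = points[k], points[(k + 1) % n]
        for s in range(samples_per_segment):
            t = s / samples_per_segment
            contour.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
    return contour
```

Because the contour is rebuilt from the landmarks, it can be redrawn after each tracking step by calling `sample_contour` on the updated points.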



Rather than use the


LEAKS are often just material which was supposed to be in the public domain all along. Copyright is not the issue. Since the material was kept secret (often for no truly justifiable reason), those whose input it contained assumed that no moderation would be needed. As such, secrecy resulted in mischief, rudeness, and often the perpetuation of misconduct, which relied on a lack of wider awareness.

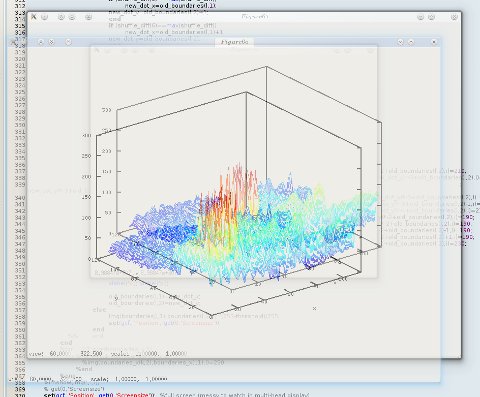


I HAD worries that GNU Octave would not support some of the advanced graphing functionality of MATLAB, but with the help of tools like gnuplot, Octave stays on par in this game (bar some OpenGL enhancements). Much to my surprise, the 3-D charting and graphing in GNU Octave holds up well. Here are some visualisations of cardiac images I work with.