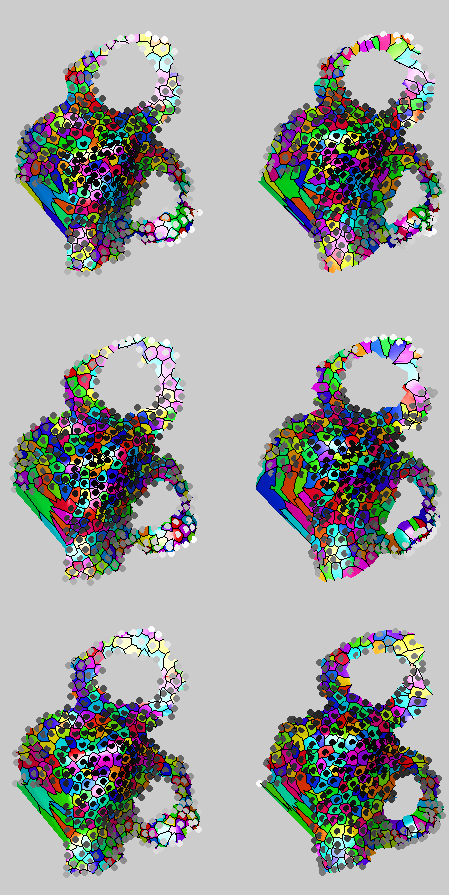

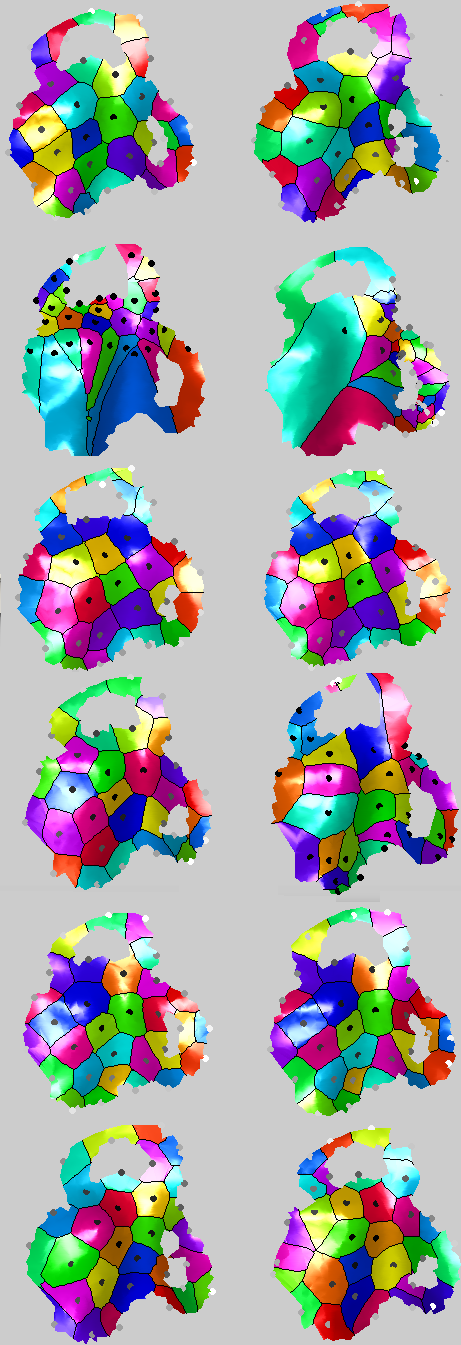

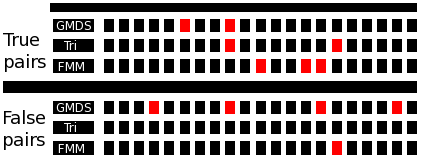

Using a more brute-force approach which takes into account a broader stochastic process, performance seems to have improved to the point where, for 50 pairs (100 images), there are just 2 mistakes using the FMM-based approach and 1 using the triangles counting approach. This seems to have some real potential, even though it is slow for the time being (partly because 4 methods are being tested at the same time, including two GMDS-based approaches).

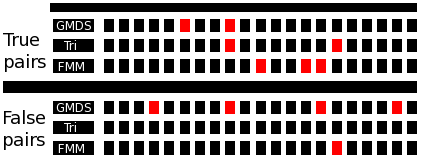

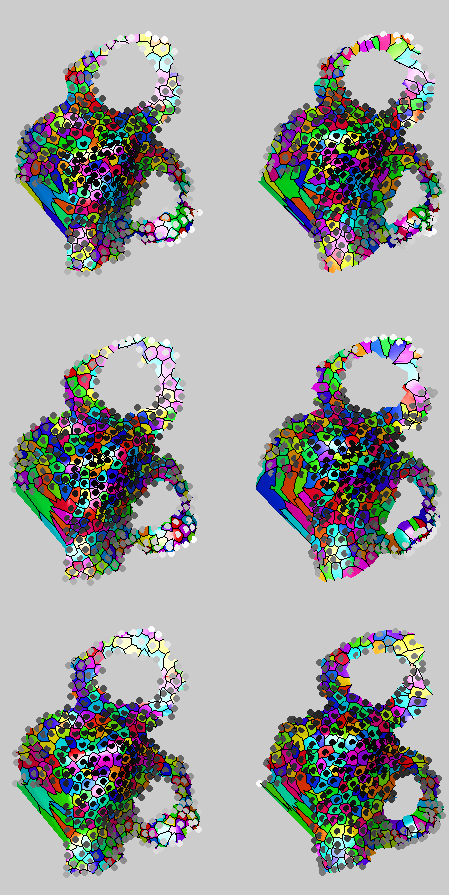

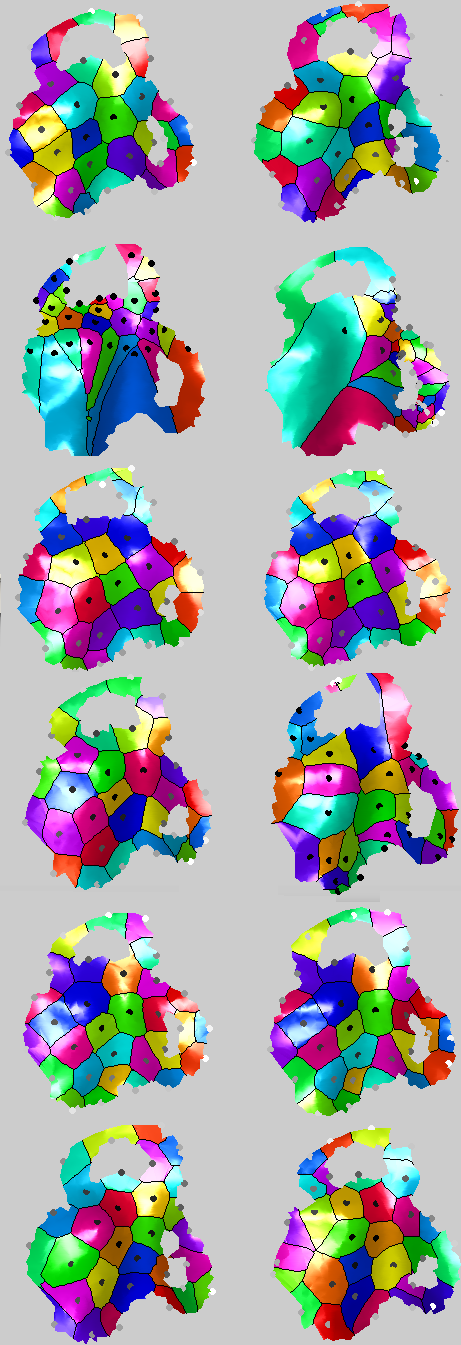

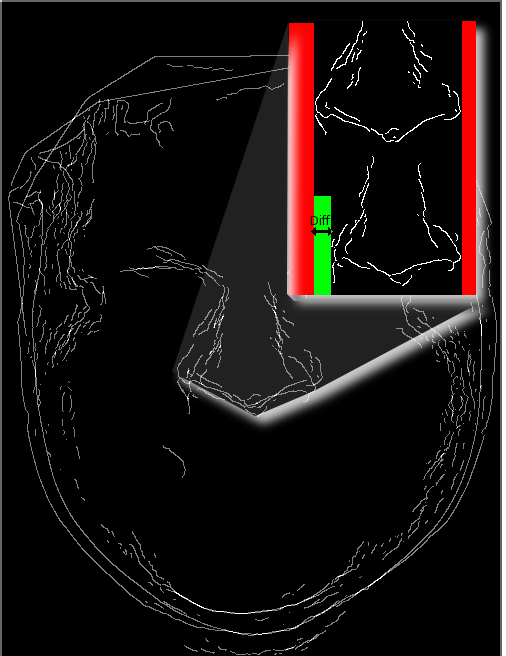

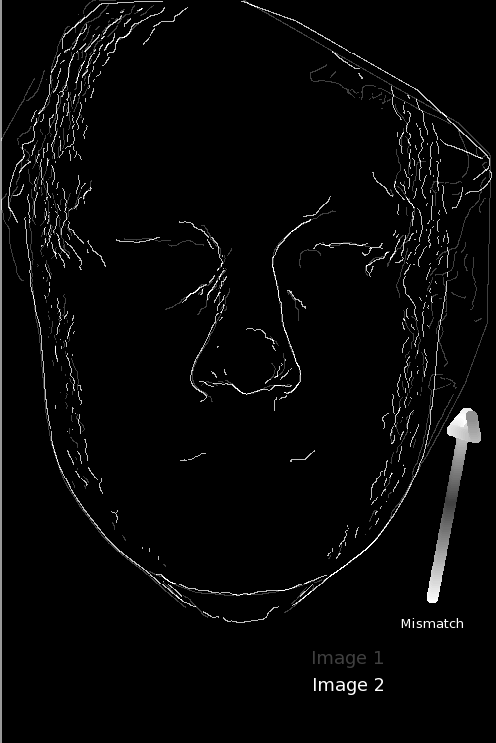

I then restarted the experiment with 4 times more points and 84 images, and I’ll run it for longer. At the start there were no mistakes, but it’s slow. The purpose of this long experiment is to see whether length of exploration and use of numerous methods at once can yield a better comparator. In the following diagram, red indicates wrong classification. Since similarity is measured in 3 different ways, there is room for classification based on a majority, in which case only one mistake is made. It’s the 9th comparison of the true pairs, which is shown as well. The mouth, and arguably the whole lower part of the face, is a bit twisted; the FMM-based approach got it right, but the other two failed. Previously, when the process was faster, the results were actually better.
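To make the majority idea concrete, here is a minimal sketch with made-up votes (the matrix `votes` and the vector `truth` are hypothetical, not data from the experiment). Each row is one comparison and each column is one of the three similarity measures; a pair is accepted when at least two measures accept it.

```matlab
% Hypothetical decisions: rows are comparisons, columns are the three
% similarity measures; 1 means "same person", 0 means "different person".
votes = [1 1 1;
         1 0 0;    % only the first measure gets this true pair right
         0 1 1;
         0 0 0];
truth = [1; 1; 1; 0];   % hypothetical ground truth for the four comparisons

% A pair is accepted when at least two of the three measures accept it.
majority = sum(votes, 2) >= 2;

% Count errors per measure and for the combined (majority) decision.
errorsPerMeasure = sum(votes ~= repmat(truth, 1, size(votes, 2)), 1);
errorsMajority   = sum(majority ~= truth);
```

In this toy case the second comparison behaves like the 9th true pair described above: one measure is right, the other two are wrong, so the majority is wrong as well.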

Scatter alterations were made to investigate the potential of yet more brute force. I reran the experiments as before, but with different parameters that scatter the random sample closer to the centre of the face, and this eliminated the one mistake made before (9th true pair). The changes resulted in one single result which was not correct: the 15th image in the other gallery. Whereas it was previously intuitive to find a fix for one mistake, when this fix introduces a mistake elsewhere it’s time to think of a change of approach. One solution might be to increase the scatter sample/range, but it is already very slow as it is.

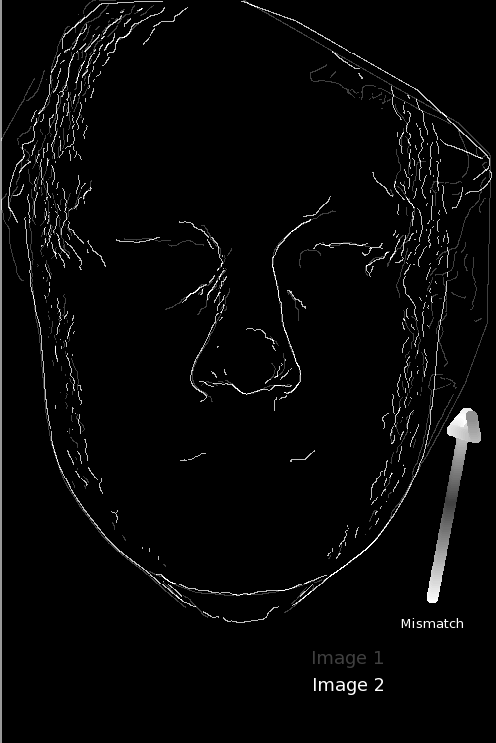

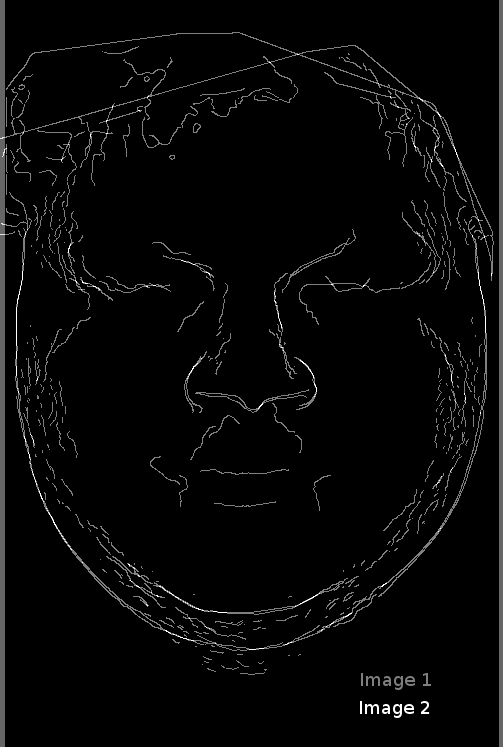

Edge detection was then explored as another classifier facilitator.

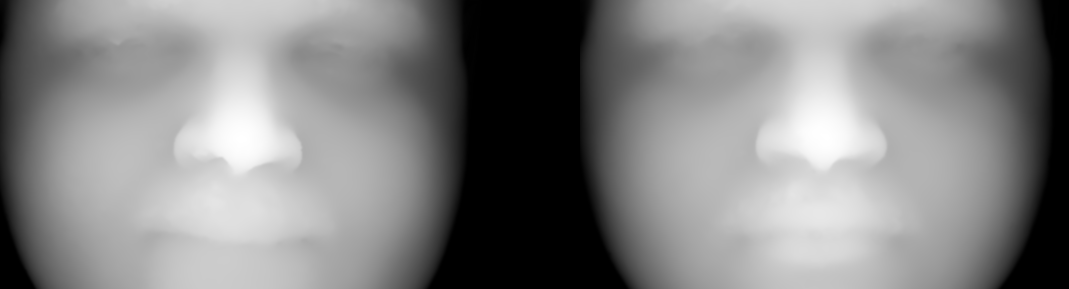

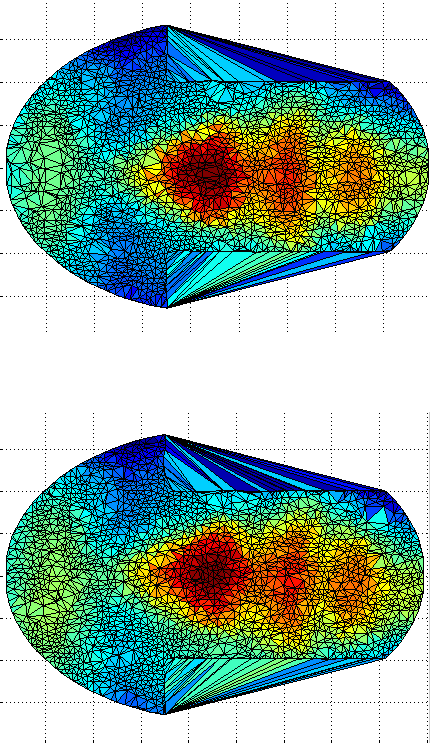

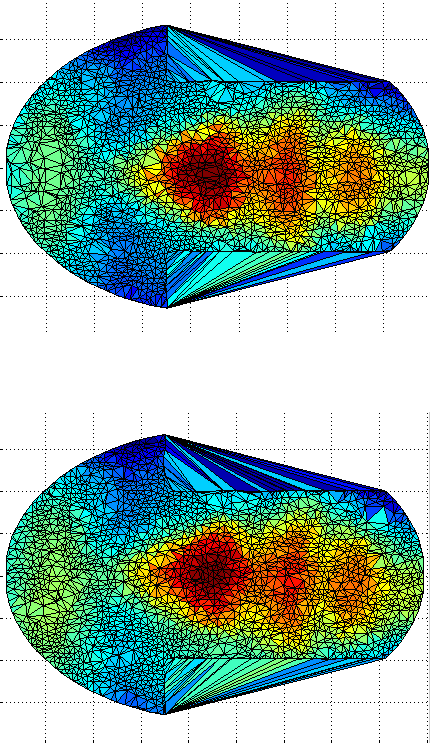

In order to address the recurring issue where misclassifications are caused by improperly accounting for details versus topology, another approach is going to be implemented and added to the stack of methods already in use. The approach will use edge detection near anatomically distinct features and then perform measurements based on the output. As the image below shows, GMDS is sometimes still inclined to accept false pairs as though they match, and this weakens the GMDS “best fit” approach.

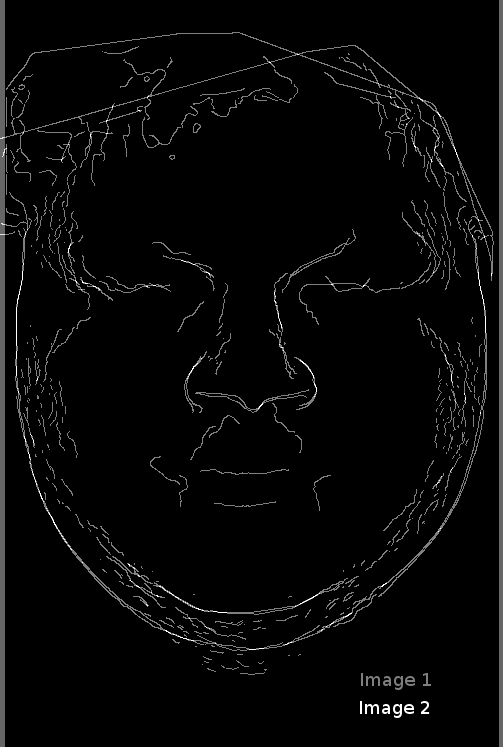

I have implemented a 3-D classification method based on filters and Canny edge detector, essentially measuring distances on the surface — distances between edges. So far, based on 20 or so comparisons, there are no errors. But ultimately, this can be used as one classifier among several.
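As a rough illustration of what measuring distances between edges can look like, here is a sketch that assumes plain Euclidean distances in 3-D between edge pixels of a range image. The range image `Z`, the two edge maps `noseEdge` and `faceEdge`, and the nearest-point averaging are all hypothetical choices for the example, not the actual classifier.

```matlab
% Hypothetical 5x5 range image (depth per pixel) and two binary edge maps.
Z = zeros(5);
Z(:, 4) = 2;
noseEdge = false(5);  noseEdge(3, 2) = true;
faceEdge = false(5);  faceEdge(3, 4) = true;  faceEdge(1, 5) = true;

% Turn edge pixels into 3-D points (column, row, depth); unit pixel spacing
% is assumed. find() and logical indexing both run in column-major order.
[r1, c1] = find(noseEdge);
[r2, c2] = find(faceEdge);
P = [c1, r1, Z(noseEdge)];
Q = [c2, r2, Z(faceEdge)];

% For each nose-edge point, take the Euclidean distance to the nearest
% face-edge point, then average to obtain a single measurement.
d = zeros(size(P, 1), 1);
for i = 1:size(P, 1)
    diffs = Q - repmat(P(i, :), size(Q, 1), 1);
    d(i) = min(sqrt(sum(diffs.^2, 2)));
end
score = mean(d);
```

A measurement like `score` could then be compared across the two faces of a pair; how it is thresholded is left open here.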

The thing about Canny is that, if we do that, we might as well try using the set

Laplacian(I) - |g(I)| * div(g(I)/|g(I)|) = 0

where g(I) = grad(I), which is the Haralick part of the “Canny” edge detector, i.e. without the hysteresis integration.
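The condition above amounts to looking for zero crossings of the second derivative of I taken along the gradient direction. Here is a small sketch of that quantity using finite differences on a synthetic image; the test image `I = x.^3` and the use of the base `gradient` function are my own choices for illustration.

```matlab
% Synthetic image with an inflection (edge-like profile) along x = 0.
[x, y] = meshgrid(-4:4, -3:3);
I = x.^3;

% First and second derivatives by central differences.
[Ix, Iy]   = gradient(I);
[Ixx, Ixy] = gradient(Ix);
[~,   Iyy] = gradient(Iy);

% Second derivative along the gradient direction:
% (Ix^2*Ixx + 2*Ix*Iy*Ixy + Iy^2*Iyy) / |grad I|^2,
% guarded against division by zero where the gradient vanishes.
g2 = Ix.^2 + Iy.^2;
D = (Ix.^2 .* Ixx + 2 .* Ix .* Iy .* Ixy + Iy.^2 .* Iyy) ./ max(g2, eps);

% D changes sign across the centre column, which marks the zero crossing.
centreRow = D(4, 4:6);
```

Edge candidates are the sign changes of `D`; unlike full Canny there is no hysteresis step here, so weak zero crossings would still need to be filtered by gradient magnitude.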

I decided to look into changing it. Currently I use:

```matlab
% Magic numbers
PercentOfPixelsNotEdges = .7; % Used for selecting thresholds
ThresholdRatio = .4;          % Low thresh is this fraction of the high.

% Calculate gradients using a derivative of Gaussian filter
[dx, dy] = smoothGradient(a, sigma);

% Calculate Magnitude of Gradient
magGrad = hypot(dx, dy);

% Normalize for threshold selection
magmax = max(magGrad(:));
if magmax > 0
    magGrad = magGrad / magmax;
end

% Determine Hysteresis Thresholds
[lowThresh, highThresh] = selectThresholds(thresh, magGrad, PercentOfPixelsNotEdges, ThresholdRatio, mfilename);

% Perform Non-Maximum Suppression Thinning and Hysteresis Thresholding of Edge
% Strength
e = thinAndThreshold(e, dx, dy, magGrad, lowThresh, highThresh);
thresh = [lowThresh highThresh];
```
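Since the two magic numbers are what I would be changing, it helps to see how they might turn into thresholds. The helper `selectThresholds` is not shown above, so the following is only a sketch under the assumption that it uses a histogram-based rule: the high threshold is the magnitude below which the requested fraction of pixels falls, and the low threshold is a fixed fraction of the high one. The small `magGrad` matrix and the 64-bin histogram are made up for the example.

```matlab
% Stand-in for the normalised gradient magnitude (values in [0, 1]).
magGrad = [0.00 0.05 0.10 0.20;
           0.05 0.10 0.30 0.60;
           0.10 0.30 0.80 1.00];

PercentOfPixelsNotEdges = .7;  % fraction of pixels assumed not to be edges
ThresholdRatio = .4;           % low threshold as a fraction of the high one
nBins = 64;                    % assumed histogram resolution

% Histogram of magnitudes over nBins equal-width bins on [0, 1].
binIdx = min(floor(magGrad(:) * nBins) + 1, nBins);
counts = accumarray(binIdx, 1, [nBins 1]);

% High threshold: first bin at which the cumulative count exceeds the
% requested number of non-edge pixels.
highBin = find(cumsum(counts) > PercentOfPixelsNotEdges * numel(magGrad), 1, 'first');
highThresh = highBin / nBins;
lowThresh  = ThresholdRatio * highThresh;
```

Under this reading, lowering `PercentOfPixelsNotEdges` lowers both thresholds and admits more edge pixels, while `ThresholdRatio` only controls how far weak edges may extend from strong ones during hysteresis.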

There is a lot that we can do with edges to complement the FMM-based classifiers (triangles count, GMDS, others), but moreover, I am thinking of placing markers on edges/corners (derived from range images) and then calculating geodesics between those. Right now it is all Euclidean, without accounting for spatial properties like curves in the vicinity. Choosing many points and repeating the process slows everything down, but previous experiments show that this has potential. None of it is multi-scale just yet.

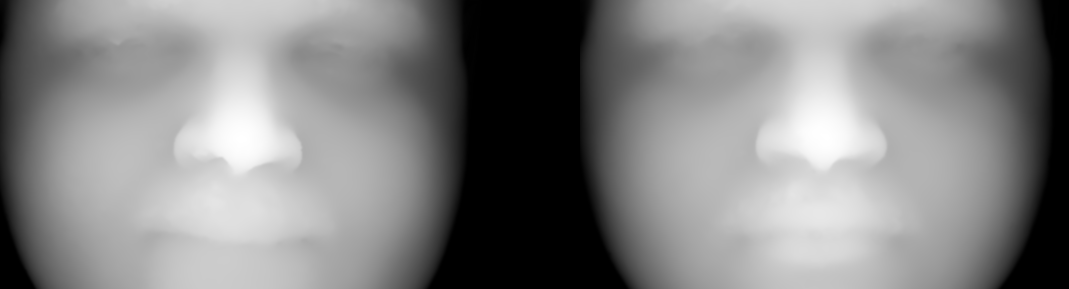

What we do with the edges is also risky, as the edges strongly depend on the pose estimation and expression. In pose-corrected pairs (post-ICP) I measure distances between face edges and nose edges. Other parts are too blurry and don’t give sharp enough an edge which is also resistant to expression. The nose is also surrounded by a rigid surface (pose-agnostic for the most part).

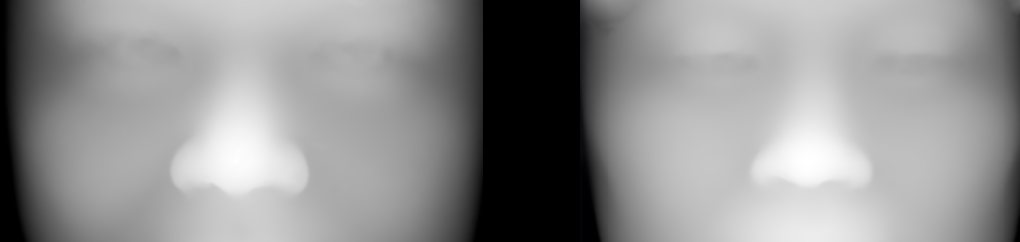

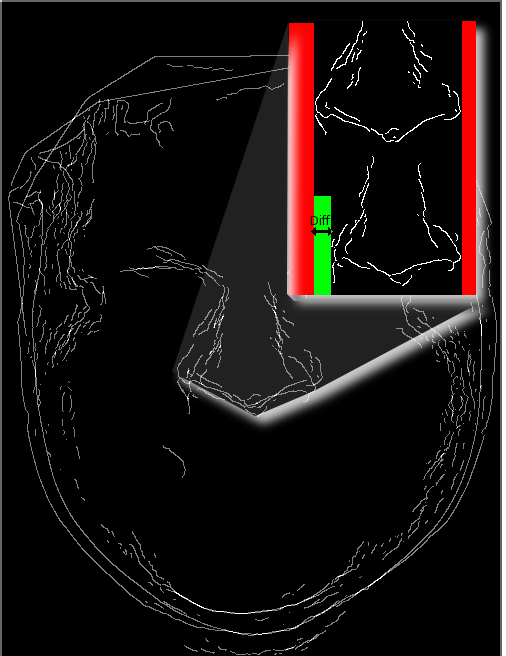

Problematic cases still exist nonetheless, and I am trying to find ways to get past them. One clear example is this first occurrence in the 25th pair, where edge detection is not consistent enough to make these distances unambiguous. In such cases, Euclidean measures, just like their geodesic counterparts, are likely to fail, incorrectly claiming the noses to be of different people.

A modified edge detection-based mechanism is now in place so as to serve as another classifier. It does fail as a classifier when edge detection fails, as shown in the image.
