On interacting with various file servers or client servers as though they are local

Background

Sometimes we may wish to allow users who are logged in remotely (away from their main workstations), or who wish to connect to another host where essential files are located, to access those files. A convenient way to achieve this without proprietary protocols is SSH in SCP ‘mode’, meaning that OpenSSH is used to gather information about remote filesystems and pass files across the network on demand. There are convenient ways to manage this in UNIXy file managers. These are unified by the universal command-line syntax, but the front ends may vary and be based on Qt, GTK, etc. Here is a demonstration of how it can be achieved in Dolphin (KDE) in order to log in remotely to an SSH-enabled host (one running sshd).

Connecting to the Server

File managers typically have an address bar, which in simplified interfaces is not editable until one presses the widely supported CTRL+L shortcut (for location); this replaces the breadcrumbs-style interface with an editable line. Here is an example of Dolphin before and after.

Now, enter the address of the server with the syntax understood by the file manager, e.g. for KDE:

fish://[USER name]@[SERVER address]

One can append "/[DIRECTORY path]" to open a particular directory on the accessed server.
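As a minimal sketch of the address format above, the following Python helper assembles a fish:// address from its parts. The function name `fish_url` and the example user, host and path are hypothetical, and the layout assumed here is the ordinary URL one: an optional port after a colon, then the directory path after a slash.

```python
from urllib.parse import quote


def fish_url(user, host, path="", port=None):
    """Build a fish:// address for a KDE file manager's location bar.

    Hypothetical helper: assumes the usual user@host[:port]/path layout.
    """
    authority = f"{quote(user, safe='')}@{host}"
    if port is not None:
        authority += f":{port}"
    # Percent-encode spaces and other unsafe characters, keeping slashes.
    clean_path = quote(path.lstrip("/"))
    return f"fish://{authority}/{clean_path}"


print(fish_url("alice", "files.example.org"))
print(fish_url("alice", "files.example.org", "/srv/shared docs", port=2222))
```

The resulting string is what would be typed (or pasted) into the editable location line.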

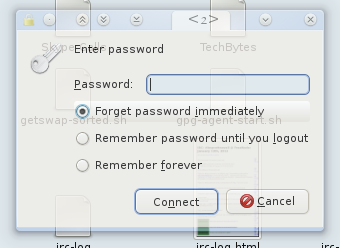

The syntax is the same for Konqueror and a few other file managers, except for the “fish://” prefix, which is specific to KDE's KIO. Here is what the password prompt/dialogue may look like.

Syntax may vary where the protocol, SSH in this case, is specified, but the port number is always the same, and Nautilus can handle this too. Once the remote filesystem is shown like a local filesystem, it can be dragged into the shortcuts pane, bookmarked, or otherwise set up for fast access in whatever way the file manager permits, including having the password(s) remembered (handled by kwallet in KDE).

The Nautilus Way

I have installed Nautilus to document the process for Nautilus as well.

The process can be done with the GUI in Nautilus. It is achieved slightly differently and takes a little longer. Here are the simple steps:

Step 1

Open Nautilus (installed under KDE in this instance, using QtCurve).

Step 2

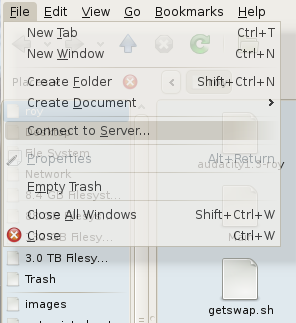

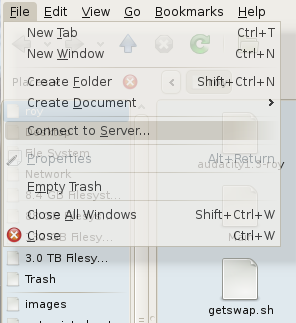

Open the “File” menu.

Click “Connect to Server…”

Step 3

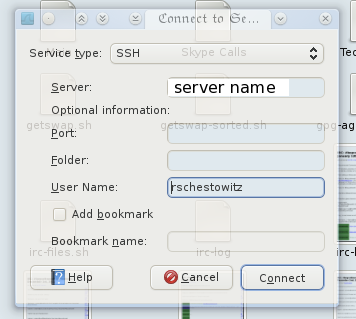

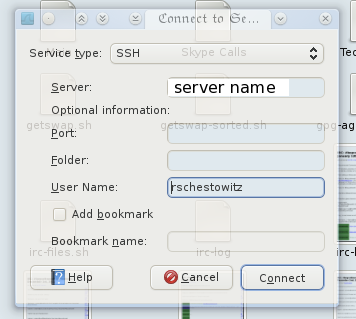

Choose SSH, unless another protocol is desired.

Step 4

Enter the server name (or IP address). Optionally enter the port number (if different from the standard port for this protocol), path (called “Folder”) and of course the username (“User Name”). Shortcuts can be created by using the options beneath.
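To illustrate how a single address maps onto the fields in this dialogue, here is a small Python sketch. The function name `dialog_fields`, the example address and the use of the `sftp://` scheme are assumptions made for illustration; the field labels follow the ones mentioned above, and port 22 is taken as the standard SSH port.

```python
from urllib.parse import urlsplit, unquote


def dialog_fields(address, default_port=22):
    """Split an SSH-style address into the fields the dialogue asks for."""
    parts = urlsplit(address)
    return {
        "Server": parts.hostname,
        # Fall back to the standard port when none is given in the address.
        "Port": parts.port or default_port,
        "Folder": unquote(parts.path) or "/",
        "User Name": parts.username,
    }


fields = dialog_fields("sftp://alice@files.example.org:2222/srv/shared")
for label, value in fields.items():
    print(f"{label}: {value}")
```

Leaving the port out of the address yields the default, which matches the "optional" nature of that field.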

Step 5

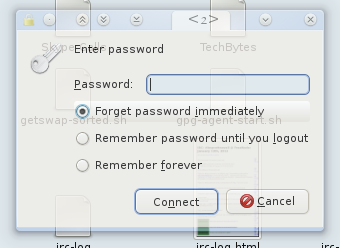

Finally, enter the password and access is then granted.

By keeping passwords in memory or on disk, one can access the remote drive again more rapidly and transparently, reaching files seamlessly.

Working on Files Remotely

This is where a lot of power can be derived from the above process. Using KIO slaves in KDE, for instance, files can be opened as though they were stored locally, and when an application saves (applies changes to) those files, KIO pushes the changed file to the remote file store in the background. This means that headless servers can be interacted with as though they were part of the machines that access them. There is no need for an X server on the remote host, either. Since many machines out there are configured to be minimal (no graphical desktop), this proves handy.
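For comparison, the same fetch, edit and push-back round trip can be done by hand with `scp`. The Python sketch below only builds the two commands and prints them; it does not run anything, and it is not a description of how KIO is implemented. The function name `scp_round_trip` and the example user, host and paths are hypothetical.

```python
import shlex


def scp_round_trip(user, host, remote_path, local_path, port=22):
    """Return the two scp commands for fetching a file and pushing it back."""
    remote = f"{user}@{host}:{remote_path}"
    base = ["scp", "-P", str(port)]  # -P selects the SSH port for scp
    fetch = base + [remote, local_path]
    push = base + [local_path, remote]
    return fetch, push


fetch, push = scp_round_trip("alice", "files.example.org",
                             "/srv/notes.txt", "/tmp/notes.txt")
print(shlex.join(fetch))  # run this, then edit /tmp/notes.txt locally
print(shlex.join(push))   # run this afterwards to send the changes back
```

The file manager approach spares the user both of these steps, which is where the convenience comes from.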

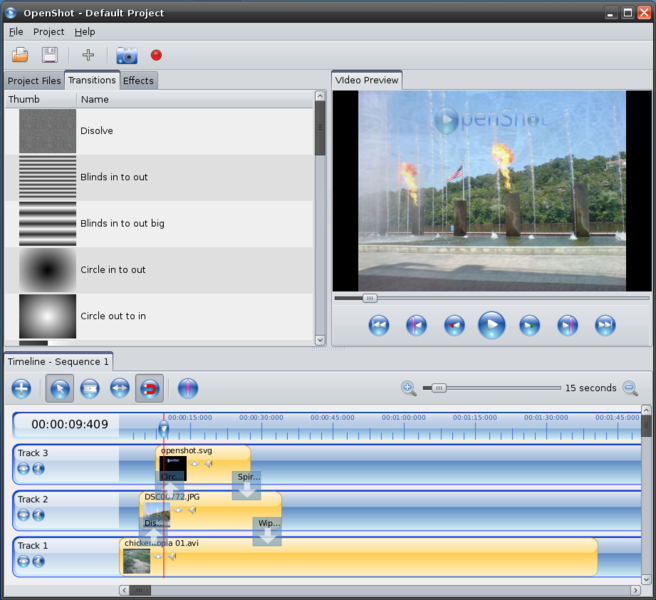




