The Dangers of an Advertising Monopoly

There have been some heated discussions recently about market distribution in the online advertising sector. One observation worth making is that most companies are in the business of driving other companies out of business, whether deliberately or not.

With the rise of software as a service, many businesses rely neither on up-front purchase prices nor on subscriptions for revenue. They use advertising instead. This model appeals to newcomers and facilitates rapid expansion. But what happens when these businesses rely on a middleman for advertising? What happens when the advertiser itself is among those that compete against the Web-based services that rely on it?

Sadly, many businesses rely on companies such as Yahoo and Google, which manage their advertising and connect them with advertisers. Both ends are customers: the advertiser and the service. The middleman gains the most. It is hard to compete with companies such as Yahoo and Google when they make pure profit from advertising. It is almost as though any business that uses a middleman for advertising is sharing its revenue with a competitor. The margins simply cannot be compared.

For example, if a company uses Yahoo for advertising in its specialised CMS, then Yahoo gets a share of the profits. If Yahoo wanted to compete head-to-head, it would not be subject to the same third-party ‘taxation’. It would therefore find it easier to compete.

With this small load off my mind, perhaps it’s worth adding that advertising will always remain controversial. It is a form of brainwashing. Marketing lies.

According to an article that I recently read, the Internet could one day be broken down into

I have just entered a squash competition, which is due to begin in October. I hope I can make a decent run for a change; judging by previous years, I tend to lose in the early rounds. While I’m experienced at tennis, I rarely get the chance to practise squash. Moreover, those who participate in the competition are generally rather good. They seem skilled with the swing and are able to see the game from a different, more advanced perspective. Endurance and strength cannot defeat these qualities.

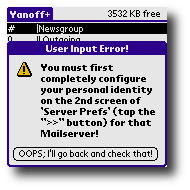

I am very pleased to have had the opportunity to work with a group of talented people. Up close and personal, figures whom you were taught to dislike (principally Calacanis) are quite friendly and kind. They are not the devils you were led to believe they are. What bothers me most are some recent accusations from conspiracy theorists. Some would argue that Netscape is trying to

Why is it that so many user interfaces simply fail to work? It’s because users are permitted to take shortcuts and ignore the instructions. This is in fact